The Evolution of Data Architecture | Everything You Need to Know About Modern Data Architecture.

From the humble beginnings of rigid hierarchies to the soaring heights of modern cloud-native solutions, data architecture has come a long way.

The evolution of data architecture is a fascinating journey that mirrors the technological advancements and changing business landscapes over the decades.

In the early days, data architecture resembled a fortress, with rigid hierarchies and centralized systems standing as its sturdy walls. Organizations relied on on-premises infrastructure and relational databases to manage their data, with access tightly controlled and innovation stifled by the limitations of the systems in place.

But as the digital landscape evolved, so too did the demands placed on data architecture. The rise of the internet, social media, and mobile technologies ushered in an era of unprecedented data growth and complexity. Traditional systems buckled under the weight of this deluge, prompting a shift towards more agile, scalable solutions.

Ye olde data architecture (Late 1980s)

In the late 1980s, data architecture was characterized by rigid hierarchies and centralized systems. Organizations relied on on-premises mainframe computers and relational databases to manage their data.

Data was predominantly structured in a hierarchical or relational manner, emphasizing transaction processing and data consistency. This era saw the emergence of data warehouses, where organizations consolidated their operational data for reporting and analysis purposes.

However, data integration was a manual and labor-intensive process, requiring specialized skills to perform ETL (Extract, Transform, Load) operations. The focus was on optimizing storage efficiency and maintaining data integrity within the confines of traditional relational databases.
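To make the ETL steps concrete, here is a minimal sketch in Python. The sample CSV data, the `orders` table, and the function names are all hypothetical, and an in-memory SQLite database stands in for a warehouse; a real pipeline of that era would have targeted a dedicated warehouse product.

```python
import csv
import io
import sqlite3

# Hypothetical raw export from an operational system (illustrative data only).
RAW_ORDERS_CSV = """order_id,customer,amount,currency
1001, alice ,19.99,usd
1002,BOB,5.00,usd
1003,carol,,usd
"""

def extract(raw_text):
    """Extract: parse CSV text into a list of dict rows."""
    return list(csv.DictReader(io.StringIO(raw_text)))

def transform(rows):
    """Transform: normalize names and types, and drop rows with no amount."""
    cleaned = []
    for row in rows:
        if not row["amount"].strip():
            continue  # skip incomplete records
        cleaned.append((
            int(row["order_id"]),
            row["customer"].strip().lower(),
            float(row["amount"]),
            row["currency"].strip().upper(),
        ))
    return cleaned

def load(rows, conn):
    """Load: write the cleaned rows into a warehouse-style table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL, currency TEXT)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?, ?)", rows)
    conn.commit()

conn = sqlite3.connect(":memory:")  # assumption: SQLite stands in for the warehouse
load(transform(extract(RAW_ORDERS_CSV)), conn)
loaded = conn.execute("SELECT customer, amount FROM orders ORDER BY order_id").fetchall()
print(loaded)
```

Each stage is a separate function, so the cleaning rules in `transform` can change without touching how data is read or written. Doing that cleaning by hand for every source is what made integration so labor-intensive.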

Transition to Big Data and distributed systems (2010s)

By 2011, the data landscape had undergone a seismic shift with the advent of Big Data and distributed systems. The exponential growth of data volume, variety, and velocity fueled the need for new approaches to data management and analysis.

Traditional relational databases struggled to cope with the scale and diversity of data generated by social media, e-commerce, and IoT devices. This led to the rise of distributed computing frameworks like Hadoop and Spark, which enabled parallel processing of massive datasets across clusters of commodity hardware.
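The idea behind these frameworks can be sketched in a few lines of plain Python. This is not Hadoop or Spark code. It only illustrates the map-and-reduce pattern they popularized, with threads standing in for cluster nodes and a hypothetical set of event partitions standing in for a distributed dataset.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from functools import reduce

# Hypothetical log partitions; in a real cluster each would live on a different node.
PARTITIONS = [
    ["click", "view", "click"],
    ["view", "purchase"],
    ["click", "view", "view"],
]

def map_partition(events):
    """Map step: count events within a single partition."""
    return Counter(events)

def merge_counts(left, right):
    """Reduce step: combine two partial counts into one."""
    return left + right

def count_events(partitions):
    # Threads stand in for cluster workers here; they only illustrate the pattern.
    with ThreadPoolExecutor(max_workers=3) as pool:
        partials = list(pool.map(map_partition, partitions))
    return reduce(merge_counts, partials, Counter())

totals = count_events(PARTITIONS)
print(dict(totals))
```

Because each partition is counted independently, adding more partitions and more workers lets the same logic handle more data. That property is what allowed clusters of commodity hardware to take on workloads that a single database server could not.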

Organizations embraced the concept of data lakes, leveraging scalable storage solutions to capture and store raw, unstructured data from various sources. The focus shifted towards flexible and agile data architectures capable of accommodating diverse data types and supporting advanced analytics use cases.

Modern data architecture – The Data Lakehouse (2020s)

Fast forward to 2024, and we find ourselves in the era of the data lakehouse – a unified architecture that combines the best of data lakes and data warehouses. Cloud-native architectures have become the norm, offering greater scalability, elasticity, and cost efficiency than traditional on-premises infrastructure.

This modern approach gives organizations a single platform that stays flexible, scales with demand, and performs well for both data management and analytics.

Organizations are increasingly adopting microservices-based architectures, containerization, and serverless computing for building data-intensive applications that can adapt to changing business requirements.

Data engineering has become more agile and automated, with the widespread adoption of DevOps practices and technologies like Kubernetes for orchestrating containerized workloads. Data governance has evolved into a strategic imperative, with regulatory compliance (e.g., GDPR, CCPA) and data privacy taking center stage.

Modern data governance solutions leverage AI and automation to ensure data quality, lineage, and compliance across hybrid and multi-cloud environments. Real-time analytics and decision-making are becoming standard capabilities, enabled by technologies such as stream processing and in-memory databases.

A closer look at how the modern data architecture functions

The modern data architecture landscape in the 2020s is characterized by agility, scalability, and intelligence, allowing organizations to realize the full potential of their data assets.

Structured, unstructured, and semi-structured data integration

The data lakehouse architecture accommodates diverse data types, including structured, unstructured, and semi-structured data, within a single unified platform. This enables organizations to ingest, store, and process data from various sources, ranging from traditional databases to streaming sources and IoT devices.
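As a rough illustration of storing mixed data types side by side, the sketch below wraps structured CSV rows, semi-structured JSON lines, and unstructured text in one common envelope. The `ingest` function, the source names, and the envelope fields are hypothetical, and a Python list stands in for the storage layer of a real lakehouse.

```python
import csv
import io
import json

def ingest(source, fmt, payload):
    """Wrap any payload in a common envelope so it can be stored side by side."""
    if fmt == "csv":            # structured: fixed columns
        records = list(csv.DictReader(io.StringIO(payload)))
    elif fmt == "json":         # semi-structured: nested or optional fields
        records = [json.loads(line) for line in payload.splitlines() if line.strip()]
    elif fmt == "text":         # unstructured: keep the raw text as is
        records = [{"text": payload}]
    else:
        raise ValueError(f"unsupported format: {fmt}")
    return [{"source": source, "format": fmt, "data": r} for r in records]

store = []  # assumption: a list stands in for the lakehouse storage layer
store += ingest("crm_db", "csv", "id,name\n1,Alice\n2,Bob\n")
store += ingest("iot_gateway", "json", '{"device": "t-1", "temp": 21.5}\n{"device": "t-2"}\n')
store += ingest("support_inbox", "text", "The app crashes when I upload a photo.")

formats = [r["format"] for r in store]
print(len(store), formats)
```

Note that the second IoT record has no `temp` field and is still accepted. Tolerating records whose shape varies is the main practical difference between this style of ingestion and loading into a fixed relational schema.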

Unified Processing for Business Intelligence (BI), streaming analytics, data science, and Machine Learning (ML)

Data within the lakehouse undergoes unified processing pipelines, where it is transformed and enriched to support a wide range of analytics use cases. This includes traditional BI reporting, real-time streaming analytics, advanced data science, and machine learning applications.

Organizations can derive actionable insights from their data in near real-time by leveraging a common data processing framework, driving informed decision-making and business innovation.

Business Intelligence (BI) and reporting

The data processed within the lakehouse powers sophisticated BI and reporting solutions, providing stakeholders with interactive dashboards and visualizations to monitor KPIs, track performance, and uncover trends.

Advanced analytics capabilities, such as predictive modeling and prescriptive analytics, enable organizations to forecast future outcomes and optimize business processes.

Real-time streaming analytics

The lakehouse architecture facilitates real-time streaming analytics, allowing organizations to analyze and act upon data as it is generated. This enables proactive decision-making, event detection, and anomaly detection in high-velocity data streams, empowering businesses to respond rapidly to changing market conditions and customer needs.
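One simple way to picture anomaly detection on a stream is a rolling-window check: each new reading is compared with the average of the last few readings and flagged if it sits too far away. The sketch below assumes a hypothetical list of sensor readings and an arbitrary window size and threshold. Production stream processors use more robust methods, so treat this as an illustration of the idea only.

```python
from collections import deque
from statistics import mean, pstdev

def detect_anomalies(stream, window=5, threshold=3.0):
    """Yield (index, value) for readings far from the recent rolling average."""
    recent = deque(maxlen=window)
    for i, value in enumerate(stream):
        if len(recent) == window:
            mu = mean(recent)
            sigma = pstdev(recent)
            # Flag the value if it is more than `threshold` std devs from the mean.
            if sigma > 0 and abs(value - mu) > threshold * sigma:
                yield i, value
        recent.append(value)

# Hypothetical sensor readings with one obvious spike.
readings = [10.0, 10.2, 9.9, 10.1, 10.0, 10.1, 25.0, 10.0, 9.8]
anomalies = list(detect_anomalies(readings))
print(anomalies)
```

The function is a generator, so it processes one reading at a time and can flag an event as soon as it arrives rather than waiting for a batch. One known weakness of this simple version is that a spike stays in the window for a while and temporarily widens what counts as normal.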

Data science and Machine Learning (ML)

Data scientists and ML engineers leverage the rich and diverse data available within the lakehouse to develop and deploy advanced analytics models.

Whether it’s building recommendation engines, predictive maintenance algorithms, or fraud detection systems, the lakehouse provides a unified platform for experimentation, model training, and deployment at scale.

Continuous improvement and iteration

One of the key advantages of the lakehouse architecture is its ability to support continuous improvement and iteration. Organizations can refine their data pipelines, analytics models, and business processes based on ongoing feedback and insights derived from data analysis.

This iterative approach fosters innovation and agility, enabling organizations to stay ahead in today’s rapidly evolving business landscape.

Why Modern Data Architecture Matters

The importance of modern data architecture cannot be overstated, especially in today’s hyper-connected and data-driven world. It serves as the foundation upon which organizations build their data infrastructure, enabling them to unlock the full potential of their data assets and drive business success.

1. Make informed decisions based on data-driven insights

By integrating diverse data sources and implementing advanced analytics capabilities, businesses can gain a comprehensive understanding of their operations, customer behavior, and market trends.

2. Agility and scalability – the essential attributes of successful data architecture

Modern data architectures leverage cloud-native technologies and scalable infrastructure, allowing organizations to adapt to changing requirements and scale their data processing capabilities on demand. This agility enables rapid experimentation, faster innovation, and shorter time-to-market for new products and services.

3. Cost efficiency

Traditional data architectures often suffer from high upfront costs and ongoing maintenance expenses. Modern data architecture, on the other hand, offers cost-efficient solutions by leveraging cloud computing, open-source technologies, and pay-as-you-go pricing models.

4. Regulatory compliance and data security

Modern data architecture incorporates robust security and governance controls, including encryption, access controls, and audit trails, to protect sensitive data and ensure compliance with regulatory requirements such as GDPR, CCPA, and HIPAA.

5. Innovation and competitive advantage

By harnessing the power of advanced analytics, machine learning, and artificial intelligence, organizations can uncover hidden patterns, anticipate market trends, and capitalize on new opportunities.

Whether it’s optimizing business processes, personalizing customer experiences, or developing new products and services, modern data architecture provides the foundation for innovation and growth in the digital age.

The advantages of modern data architecture

Modern data architecture offers a range of benefits that help organizations extract more value from their data assets. Let’s explore some of them.

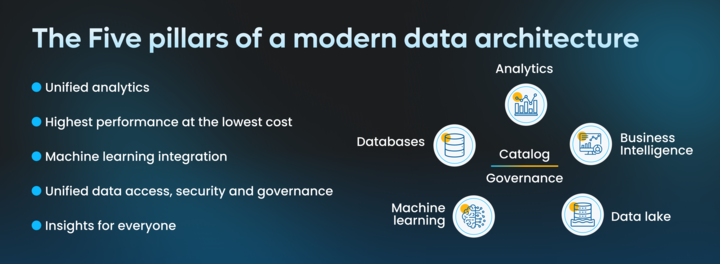

Unified analytics

Imagine having all your data – structured, unstructured, and semi-structured – sitting in one place, accessible and ready for analysis. That’s the beauty of modern data architecture.

With unified analytics, organizations can break down data silos and gain comprehensive insights across all facets of their business operations.

Whether it’s tracking sales performance, analyzing customer sentiment from social media, or predicting market trends, unified analytics enables holistic decision-making based on a complete view of the data landscape.

Highest performance at the lowest cost

Gone are the days of compromising between performance and cost. Modern data architecture leverages cloud-native technologies and scalable infrastructure to deliver strong performance at an affordable price point.

By using distributed computing and parallel processing, organizations can process massive volumes of data quickly while optimizing resource utilization to keep costs down. This means faster insights and better ROI on your data investments.

Machine Learning integration

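Modern data architecture also makes it easier to apply machine learning directly to the data an organization already holds. Whether it’s automating repetitive tasks, identifying patterns in customer behavior, or predicting equipment failures before they occur, ML algorithms can sharpen decision-making and drive business outcomes.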

Unified data access

Modern data architecture breaks down barriers between disparate data sources, providing a single point of access for all your data needs.

Whether it’s structured data stored in a traditional database, unstructured data residing in a data lake, or real-time streaming data from IoT devices, users can access and analyze data seamlessly across the entire organization.

This democratization of data empowers teams to make informed decisions based on a unified understanding of the business landscape.

Security & governance

Modern data architecture prioritizes robust security and governance controls to protect sensitive data and ensure compliance with regulatory requirements.

From encryption and access controls to audit trails and data lineage tracking, organizations can enforce strict security policies and maintain data integrity throughout the data lifecycle.
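Two of these controls, access checks and audit trails, can be sketched together. The roles, dataset names, and `read_dataset` function below are hypothetical. Real platforms keep permissions in a dedicated policy service and write audit records to tamper-resistant storage, so this shows only the shape of the idea.

```python
from datetime import datetime, timezone

# Hypothetical role-to-dataset permissions; real platforms manage these in a policy service.
PERMISSIONS = {
    "analyst": {"sales_summary"},
    "data_engineer": {"sales_summary", "raw_customer_events"},
}

audit_log = []  # assumption: an in-memory list stands in for durable audit storage

def read_dataset(user, role, dataset):
    """Check access for a role and record every attempt in the audit trail."""
    allowed = dataset in PERMISSIONS.get(role, set())
    audit_log.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "dataset": dataset,
        "allowed": allowed,
    })
    if not allowed:
        raise PermissionError(f"{user} ({role}) may not read {dataset}")
    return f"rows from {dataset}"

print(read_dataset("dana", "data_engineer", "raw_customer_events"))
try:
    read_dataset("sam", "analyst", "raw_customer_events")
except PermissionError as exc:
    print("denied:", exc)
```

The audit entry is written before the permission check raises, so denied attempts are recorded as well as successful ones. A reviewer can then see who tried to reach sensitive data, not only who succeeded.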

By instilling trust and confidence in the data ecosystem, organizations can mitigate risks and safeguard against potential threats.

Insights for everyone

Last but not least, modern data architecture democratizes insights, making data-driven decision-making accessible to everyone within the organization.

Whether you’re a business analyst, data scientist, or frontline employee, you have the tools and resources at your fingertips to derive actionable insights from data.

Interactive dashboards, self-service analytics tools, and natural language processing capabilities empower users to explore data, ask questions, and uncover insights without relying on IT or data specialists.

The need to adopt a modern data architecture cannot be overstated

The adoption of a modern architecture isn’t just a technological upgrade – it’s a fundamental shift in mindset and approach.

For those companies willing to take the leap, the rewards can be substantial.

It’s not just about keeping up with the latest trends – it’s about setting the stage for long-term success and resilience in the rapidly changing digital ecosystem.

A Quick Glossary

Data Architecture – The design and organization of data systems, including databases, data warehouses, and data lakes, to facilitate efficient data storage, retrieval, and analysis.

Rigid Hierarchies – Traditional, inflexible data structures characterized by centralized systems and strict organizational hierarchies.

Cloud-Native Solutions – Applications and services designed specifically to run in cloud computing environments, leveraging cloud-native technologies such as containers, microservices, and serverless computing.

Relational Databases – A type of database that organizes data into tables with rows and columns, and enforces relationships between them, typically using Structured Query Language (SQL) for querying and manipulation.

Data Lake – A centralized repository that stores large volumes of raw data in its native format, whether structured, semi-structured, or unstructured, from various sources, providing a platform for advanced analytics and data exploration.

Cloud Computing – The delivery of computing services—including servers, storage, databases, networking, software, and analytics—over the internet (the cloud) to offer faster innovation, flexible resources, and economies of scale.

Agile – A methodology for software development characterized by iterative, incremental approaches and collaboration between self-organizing, cross-functional teams.

Scalability – The ability of a system to handle growing amounts of work or data by adding resources or nodes to accommodate increased demand without compromising performance.

ETL (Extract, Transform, Load) – A process of extracting data from various sources, transforming it into a standardized format, and loading it into a target system, typically used for data integration and migration.

Machine Learning (ML) – A subset of artificial intelligence that enables systems to learn from data, identify patterns, and make decisions without being explicitly programmed for each task.

Artificial Intelligence (AI) – The simulation of human intelligence processes by machines, including learning, reasoning, problem-solving, perception, and language understanding.

Real-Time Analytics – The analysis of data as it is generated or received, enabling immediate insights and decision-making based on up-to-date information.

Predictive Modeling – A statistical technique used to predict future outcomes based on historical data and patterns, often employed in forecasting, risk assessment, and decision-making.

Data Warehouse – A centralized repository that stores structured and processed data from multiple sources for reporting, analysis, and business intelligence purposes.

Data Lakehouse – A unified architecture that combines the storage and processing capabilities of data lakes with the structured querying and ACID transactions of data warehouses, providing a versatile platform for modern data analytics.

Cloud-Native Technologies – Software tools and architectures designed specifically to leverage the advantages of cloud computing, including scalability, resilience, and agility.

Microservices – A software development approach that structures an application as a collection of loosely coupled, independently deployable services, each responsible for a specific business function.

Serverless Computing – A cloud computing execution model where the cloud provider dynamically manages the allocation and provisioning of servers, allowing developers to focus on writing code without worrying about server management.

Structured Query Language (SQL) – A domain-specific language used for managing and manipulating relational databases, including tasks such as querying data, updating records, and managing database schema.

Data Governance – The process of managing the availability, usability, integrity, and security of data across an organization, ensuring compliance with regulatory requirements and alignment with business objectives.